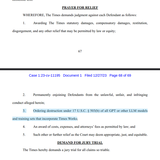

("Ordering destruction under 17 U.S.C. § 503(b) of all GPT or other LLM models and training sets that incorporate Times Works")

Hello friends, for the world of Artificial Intelligence, the new year 2024 has started quite interestingly.

At the end of last 2023, NYT started a lawsuit against OpenAI with several specific demands.

One of NYT's demands, recorded under item 3 of their demands, is the destruction of, I quote: "all GPT or other LLM models".

The other demands, of course, are for a lot of money... Ha ha ha, it's clear, right?

In the lawsuit, NYT accuses OpenAI of using copyrighted articles from NYT in the training of ChatGPT without proper permission or compensation.

The official lawsuit was initiated by the plaintiff NYT after Unsuccessful Negotiations with OpenAI.

However, comments from OpenAI make it clear that before filing the lawsuit, months of unsuccessful negotiations for a licensing agreement between OpenAI and NYT had taken place.

OpenAI shares that back in the summer, they expressly provided to publishers including NYT, I quote: "a content opt-out mechanism".

A mechanism that was expressly accepted by NYT in August 2023.

According to this mechanism, publishers can submit information to OpenAI to exclude content that is their intellectual property from being used for training ChatGPT or other Artificial Intelligence LLM models.

What exactly does LLM models mean?

By "LLM models" we mean "Large Language Models".

Decoded and translated, these are large artificial intelligence models specialized in language processing.

These models are trained on huge amounts of text data and can perform various tasks related to natural language processing, such as generating text, understanding language, translations, and answering questions.

ChatGPT, which is developed by OpenAI, is an example of such an LLM model.

On the link on the podcast site Spirit in a Shell: Podcast with Lupo you can find the full text of the NYT lawsuit against OpenAI: NYT_Complaint_Dec2023.pdf

The more interesting part, however, is that on January 8, 2024, on the OpenAI blog, there is an official response to the allegations of NYT.

The title of the blog post is: "OpenAI and Journalism".

You can read the original full text of the response from their post, here on the podcast below I will briefly summarize what is discussed in this response:

Quoting:

"Our goal is to develop Artificial Intelligence tools that help people solve problems that would otherwise be unattainable."

People around the world are already using our technology to improve their daily lives.

Millions of developers and more than 92% of the Fortune 500 companies today develop based on our products.

Although we disagree with the claims in the "New York Times" lawsuit, we see it as an opportunity to clarify our business, our intentions, and the way we build our technology.

Our position can be summarized in the following four points, which we clarify below:

- We collaborate with news organizations and create new opportunities.

- The training is within fair use (fair use), but we provide an opt-out mechanism (opt-out) because it is the right action.

- "Repetition" is a rare problem that we are working to reduce to zero.

- "The New York Times" does not tell the whole story."

Friends, for everything to be crystal clear, here I would like to start with the explicit explanation of the terms from item 2:

The training is within fair use (fair use), but we provide an opt-out mechanism (opt-out) because it is the right action.

I explain both terms in detail because I personally had to read about both explicitly to be sure I understand exactly what is at issue ... :)

1. "Fair Use":

This is a legal term, especially in the context of American legislation, which allows limited use of copyright-protected content without explicit permission from the copyright owner.

Fair use includes, but is not limited to: criticism, comment, news reporting, and research.

In the context of AI, this specifically means that OpenAI believes that using publicly available data to train its models is legal and does not infringe copyrights.

2. "We Provide an Opt-Out":

This means that the company provides a mechanism that allows publishers to choose and indicate their content, over which they have intellectual property NOT to be used by the AI system for training.

Specifically, this choice is offered by OpenAI to publishers as part of good business etiquette and respect for the rights of content owners.

For example, if a publisher does not want their articles to be part of the database used to train AI, they can notify the company and it will remove this data from its training sets.

So, according to some self-forgetful media giants, the use of publicly available information sources is illegal?

This is the case, at least according to what I understand... OpenAI openly state that the use of publicly available information is NOT illegal.

Publicly available information... definitely, in my opinion, publicly available information CAN'T be a problem... don't you think, friends?

Publicly available information, I think, is quite normal to be assumed to be accessible to everyone, and no one should be able to sue us later for having read an article published by them? ... Right?

This is expected to be the case no matter how insane and draconian the copyright and content protection laws are considered to be...

Don't you think so too, friends, or do I not understand something, and fundamentally, am I wrong? :)

Let's now, however, address the most interesting point 4 from the claims of OpenAI:

The New York Times does NOT tell the full story

According to OpenAI:

"Our discussions with The New York Times seemed to be progressing constructively until our last communication on December 19th.

The negotiations focused on a high-value partnership around real-time display with acknowledgment in ChatGPT, where The New York Times would gain a new way to connect with existing and new readers, and our users - access to their journalism.

We explained to The New York Times that their content does not significantly contribute to the training of our existing models and also will not have a significant impact on future training.

Their claim of December 27th, which we learned about when we read The New York Times, came as a surprise and disappointment to us."

Yes, things are getting more and more interesting... quite interesting, definitely!

What becomes de facto clear...

OpenAI had been actively collaborating with NYT.

Together, they develop some mechanisms for collaboration... BUT in the meantime, they make the mistake and clearly let NYT understand that their intellectual content actually HAS no particular VALUE as a source of information.

And for this reason, it is unlikely to be used for training OpenAI's LLM models... ha ha ha

In plain words, they tell them your intellectual property, about which you are making such a fuss left and right and trumpeting up and down, is not of particular interest to us and our AI LLM models.

At least that's what I understand from the whole situation.

After the NYT realizes that OpenAI has absolutely no intention of giving them anything and won't make a cent from OpenAI, the NYT decides to bring in the heavy artillery and their slick lawyers.

On December 29th, the NYT files a lawsuit for compensation and, more importantly, for the destruction of, I quote: "all GPT or other LLM models".

Well... the "buddies" at the NYT definitely have experience and know the rules of the dirty game well.

Apparently, with the interest and progress of OpenAI, the owners of the NYT have smelled dollars and gold and most likely have no intention of backing down without at least trying to snatch a substantial fat piece of the pie.

However, there is something else very important that we need to clarify before we get to the last point in this saga

Officially, according to OpenAI, as well as according to most active professional users of ChatGPT, the examples cited by the NYT of alleged repetition and quoting of parts of NYT articles by ChatGPT are impossible.

The opinions of all GPT experts are the same: the NYT shamelessly lies and manipulates the information they present.

They do NOT give full and complete access to the entire prompt, do not provide access to the session history and instructions, and the history of the specific communication they have with ChatGPT, before the AI model begins to almost verbatim repeat parts of their articles.

To achieve such a form of "quoting" and "repeating" the AI model definitely needs to be pre-instructed to do so.

Are These More Manipulations and Shallow Lies by the NYT?

Most likely yes...

Personally, I think this whole loud legal case is just another legal farce.

Much ado about nothing...

Friends, to explain once and for all why all this is happening, we definitely need to understand who exactly is the owner hidden behind the lawyers of the plaintiff NYT...

WHO is actually behind the NYT? Who owns the NYT?

The "New York Times" company, which publishes the "New York Times" newspaper, is currently owned and controlled by the Sulzberger family, which has owned it for several generations.

The Sulzberger family is a rather wealthy and famous Jewish clan from the backstage elite ruling the USA for decades...

The current chairman of the "New York Times" company and publisher of the newspaper is A. G. Sulzberger.

He is the sixth member of the Ochs-Sulzberger family to hold the position of publisher since the newspaper was purchased by Adolph Ochs in 1896.

The company operates with two types of shares, where Class A shares are traded publicly, while Class B shares are held privately.

Friends, keep in mind that Class B shares are those shares with real voice and rights in the management of the company.

The Sulzberger family owns the majority of these Class B shares, which gives them significant control over the company.

A. G. Sulzberger personally owns only 1.4% of Class A shares and a full 94.5% of Class B shares.

This structure ensures that the family retains full control, especially regarding board decisions, as Class B shareholders have the right to elect more directors to the board compared to Class A shareholders.

The "Times" has diverse revenue sources, with a significant portion coming from subscriptions.

In 2022, the company generated $2.3 billion in revenue, with 67% of it from subscriptions.

The subscription-based model has become a significant part of their business, especially with the growth of digital subscriptions.

Conclusion

Friends, it seems this loud lawsuit is another reason to seriously consider the future of Artificial Intelligence, especially this AI.

Yes, I deliberately omitted an important fact in the narration of the story that the NYT lawsuit is not only against OpenAI but also against their main investor and sponsor Micro$oft.

Honestly, I don't care at all about Micro$oft and roughly the same goes for OpenAI... a raven doesn't pluck out another raven's eye.

Both Sam Altman and those from the Sulzberger family are of the same tribe, they will come to an understanding, and in the worst case, they will exchange a few dollars, and their caravan will continue.

In this whole conflict, in my opinion, there is something much more important.

Let's not forget that ChatGPT is an AI LLM model with entirely closed code.

Yes, all this is still another black box despite the misleading "Open" in the name of the company behind it: OpenAI.

However, I want to clarify something very important here!

I personally use ChatGPT daily in my work.

For me, ChatGPT is an extremely valuable assistant in my work.

ChatGPT is the professor expert on almost every topic that interests me, who for $20 a month is available 24 hours a day.

And for these best invested $20 of mine, I almost always get the right and accurate advice and the faithful solution to the task I am facing to solve.

I definitely don't think anyone will ever be able to stop the development and entry of Artificial Intelligence into our world.

The genie is already out of the bottle... There's NO going back!

I'm not sure it's Pandora's box, as some apocalyptic analysts express and interpret Artificial Intelligence...

Yes, it's absolutely clear that at some point the good genie from the bottle in the wrong hands used in the wrong way can turn into Pandora's box overnight... FACT!

Anyway, let's leave these theories 100% aside and get back to today's problem.

I personally don't think there is anyone today, even among the ultra-rich Jews pulling the strings in America, even among them, no one is able to stop the development and entry of Artificial Intelligence.

Even such super-rich and influential American Jewish clans as those of the aforementioned Sulzberger family, one of the many lurking in the shadows of power.

Let's Talk Again About Open Source AI LLM Models

Personally, for me, this particular case is another vivid example of how vitally and extremely important the active development of Open Source Artificial Intelligence models is.

Yes, it's clear that when it comes to open source LLM models, there can't be a word about the money and resources needed to train an LLM model like GPT-4.

But let's not forget that there are already many alternatives to GPT-4 that use technologies that require tens of times fewer resources to reach the level of ChatGPT...

So, friends, in today's episode of the podcast Spirit in the Shell we mainly looked at the complex and multilayered legal dispute between OpenAI and the NYT.

On one hand, there's the question of legality - how far exactly do the boundaries of 'fair use' extend in the context of Artificial Intelligence?

How this legal case and others like it can and will reform the legal frameworks that regulate copyright in the digital era.

On the other hand, there's the ethical aspect of authorship, which is no less important.

How will companies like OpenAI manage to balance innovation with obstacles and boundaries with respect to intellectual property?

Definitely, the laws need to be reworked and adjusted to the new realities of the 21st century.

It is necessary to find the golden mean between maintaining freedom for innovation and fairness and honesty to both sides when it comes to protecting authors' rights.

This case opens many questions about the future of AI and the media industry.

One thing, however, is quite certain and clear!

We live in times when technologies are developing at super fast paces.

It is crucial that all these intellectual property laws are reworked as quickly as possible so as NOT to be an obstacle to progress.

Clearly, the balance between innovation and the protection of intellectual property is a topic that will continue to provoke debates and shape the future of our society.

Friends, thank you for listening to the end of this podcast episode.

If you like the topics discussed here consider subscribing to the podcast on YouTube and Spotify.

Definitely leave a comment! I look forward to your comments on the topic with great interest!

I read all comments and respond to most of them!

I'm Lupo, and I wish you Luck!

And keep it fun!